March 31, 2013

How to avoid runaway regression with Agile test automation

More and more businesses are adopting agile models for both development and maintenance, and it usually doesn’t take long before they all find themselves in the same situation: Runaway Regression. The burden of regression testing grows rapidly and soon surpasses the testing effort spent on new development. Before long they need daily builds, which means running the regression suites even more often. If you’re caught in this vicious cycle, how can you break it? The answer is automation: specifically, test automation.

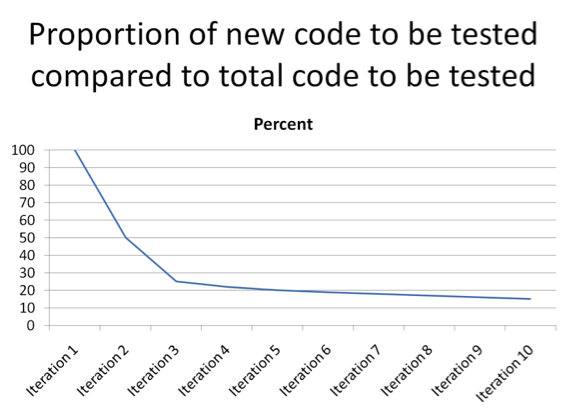

If you’re doing daily builds, you’re also running regression tests every day, and with each new iteration the regression suite is larger than in the previous round. If your development time is the same in each iteration, newly developed code makes up only 1/n of the total in iteration n. By the second iteration it is already down to about 50% of the total, and by the tenth iteration it has fallen to about 10%.
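The arithmetic behind those percentages fits in a few lines. This is a minimal sketch that assumes every iteration adds the same amount of code and that nothing is ever deleted, so the total after n iterations is n equal units:

```python
# Assumption: each iteration adds one equal-sized "unit" of code and none is
# removed, so after n iterations the codebase holds n units in total.
def new_code_share(iteration: int) -> float:
    """Fraction of the total codebase that was written in the given iteration."""
    if iteration < 1:
        raise ValueError("iterations are numbered from 1")
    total_units = iteration  # units accumulated so far
    new_units = 1            # units added in this iteration
    return new_units / total_units


for n in (1, 2, 5, 10):
    share = new_code_share(n)
    print(f"iteration {n:2d}: {share:.0%} new code, "
          f"{1 - share:.0%} existing code to regression-test")
```

Real iterations are never this uniform, but the trend holds: the existing code that needs regression testing grows with every iteration, while the new code stays roughly constant.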

Avoiding runaway regression

If you want your project team to have any hope of working on new development during the sprint, a large share of your regression tests will have to be automated. Otherwise, your team will spend all its time manually testing “old” functionality. Ideally, you want to save manual testing for features that are changed or newly developed.
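One way to apply that rule when planning an iteration is to split the test catalog by automation status and by whether the feature has changed. This is a hypothetical sketch: the `CatalogEntry` record and the `plan_iteration` function are illustrative and do not come from any particular test management tool.

```python
from dataclasses import dataclass


# Hypothetical test-catalog record; the fields are illustrative only.
@dataclass(frozen=True)
class CatalogEntry:
    name: str
    feature: str
    automated: bool


def plan_iteration(catalog, changed_features):
    """Split the catalog into automated runs, manual runs and an automation backlog.

    - every automated test runs on every build (the regression suite),
    - manual effort goes only to tests of changed or new features,
    - manual tests of unchanged features are queued as automation candidates
      instead of being run by hand again.
    """
    automated = [t for t in catalog if t.automated]
    manual = [t for t in catalog
              if not t.automated and t.feature in changed_features]
    backlog = [t for t in catalog
               if not t.automated and t.feature not in changed_features]
    return automated, manual, backlog


catalog = [
    CatalogEntry("login", "auth", automated=True),
    CatalogEntry("checkout", "orders", automated=False),
    CatalogEntry("refund", "orders", automated=False),
    CatalogEntry("export", "reports", automated=False),
]
auto, manual, backlog = plan_iteration(catalog, changed_features={"orders"})
print([t.name for t in manual])   # ['checkout', 'refund']
print([t.name for t in backlog])  # ['export']
```

The backlog list is what keeps runaway regression in check. Every manual test of unchanged functionality is a candidate for automation in the next iteration.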

Automation in major agile development projects or in agile maintenance

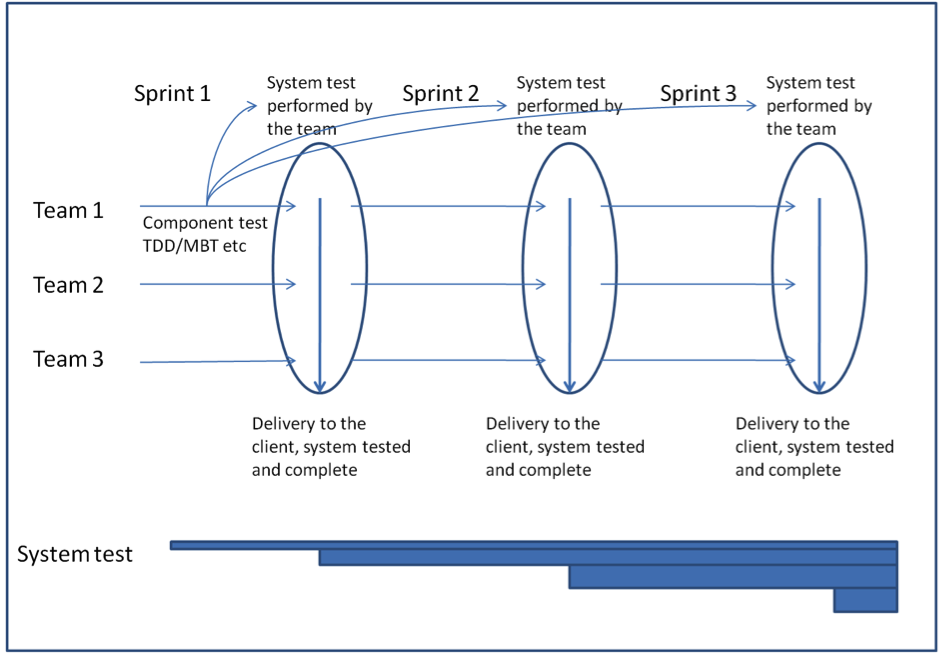

In every major agile project, you deliver an increasing amount of functionality over several iterations and even integrate work supplied by multiple parallel teams. It’s easy to use automated testing within each team, and there are lots of ways to go about it, including test-driven development (TDD) or model-based testing (MBT). Your team(s) can build on these in every iteration, in order to achieve “continuous integration” (CI), which is discussed in more detail later.
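To make test-driven development concrete, here is a minimal sketch using Python’s standard `unittest` module. The function `apply_discount` and its rules are hypothetical. In TDD you would write the test class first, watch it fail, and then write just enough of `apply_discount` to make it pass. Tests like these later become the team’s contribution to the automated regression suite.

```python
import unittest


# Hypothetical component under test. In TDD, this is written *after* the
# tests below and only needs to be good enough to make them pass.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_no_discount(self):
        self.assertEqual(apply_discount(1999, 0), 1999)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)


if __name__ == "__main__":
    unittest.main()
```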

Even if each team focuses on its own current and previous iterations, that doesn’t guarantee that all the teams’ increments will integrate flawlessly. When several teams work in parallel, you need to take additional steps to make sure you can run a system test. Ideally, this should take as little extra effort as possible, since you still want manual testing to focus on newly developed features in the latest (and upcoming) iterations.

A simple way to handle this is to have the development teams submit parts of their unit/component tests to a single team that focuses on system testing. You can treat this team as a stand-in for the client. Any code the other teams deliver to it must have passed the same readiness tests as if it were being delivered directly to the client.
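The system test team might assemble the delivered tests with Python’s standard `unittest` loader. The following is a sketch with several stand-ins. The team test classes and the `order_total` and `reserve` components are hypothetical. They are inlined here so the example runs on its own, whereas in practice each team would ship its tests in its own package.

```python
import unittest


# Hypothetical component code from two development teams.
def order_total(line_prices):
    """Team A's component: sum the line prices of an order (in cents)."""
    return sum(line_prices)


def reserve(stock, item, quantity):
    """Team B's component: reserve stock, refusing to go below zero."""
    if stock.get(item, 0) < quantity:
        raise ValueError(f"not enough {item} in stock")
    stock[item] -= quantity
    return stock[item]


# Hypothetical tests each team delivers to the system test team.
class TeamAOrderTests(unittest.TestCase):
    def test_order_total(self):
        self.assertEqual(order_total([250, 750]), 1000)


class TeamBInventoryTests(unittest.TestCase):
    def test_reserve_reduces_stock(self):
        self.assertEqual(reserve({"widget": 3}, "widget", 2), 1)

    def test_reserve_refuses_oversell(self):
        with self.assertRaises(ValueError):
            reserve({"widget": 1}, "widget", 5)


def build_system_suite(delivered_test_classes):
    """Collect every team's delivered tests into one suite for the system test team."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for test_class in delivered_test_classes:
        suite.addTests(loader.loadTestsFromTestCase(test_class))
    return suite


if __name__ == "__main__":
    suite = build_system_suite([TeamAOrderTests, TeamBInventoryTests])
    result = unittest.TextTestRunner(verbosity=2).run(suite)
    # Treat each delivery like a client handover: any failure blocks it.
    raise SystemExit(0 if result.wasSuccessful() else 1)
```

The non-zero exit code on failure lets a CI server reject the delivery automatically, just as a client would reject a build that fails its readiness tests.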

Of course, you may even want the system test team to deliver the system directly to the actual client.

The system test team combines various tests into a context that resembles the real-world functioning of the system. As additional iterations add new functionality and/or change what’s already been delivered, the team adjusts the system tests based on new unit/component tests created by the development teams. The development teams can focus on coding and component testing the current iteration, knowing that system-wide testing is safely taken care of.

To make sure that no single team breaks the build in a given iteration, you can reuse the system tests from the previous iteration. This lets you run system testing continuously instead of limiting it to a one-time event at the end of system integration or of the iteration, while still meeting tight deadlines in a short iteration.
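That reuse can be automated as a gate in the build. Below is a minimal sketch. It assumes the previous iteration’s and the current build’s system test results are available as mappings from test name to pass/fail. How you collect those results depends on your test runner, and the function name `find_regressions` is hypothetical.

```python
def find_regressions(previous, current):
    """Names of tests that passed last iteration but do not pass now.

    previous, current: dicts mapping test name -> True (passed) / False (failed).
    A test missing from `current` is reported too, so that dropping an old
    system test is a visible decision rather than a silent one.
    """
    return sorted(
        name for name, passed in previous.items()
        if passed and not current.get(name, False)
    )


previous_iteration = {"login": True, "checkout": True, "export": False}
this_build = {"login": True, "checkout": False, "export": True, "refund": True}

regressions = find_regressions(previous_iteration, this_build)
print(regressions)  # ['checkout']
if regressions:
    print("Build rejected: previously passing system tests now fail")
```

Only tests that used to pass can block the build. A test that was already failing (`export` above) or a brand-new one (`refund`) is left for the team working on that feature.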

The difference between new agile projects and agile maintenance basically boils down to what constitutes the basis of the first automated tests. In a completely new project, that basis is the development team’s unit/component tests; but in maintenance, you will almost certainly have to start from some other basis.

When a larger system already exists and you’re going to develop some of its functionality further, the easiest approach is usually to automate the system’s main workflows, which you already test during acceptance testing. It’s neither realistic nor cost-effective to try to retroactively automate every test to get full code coverage. Instead, you may be able to automate the regression tests you’ve identified at a higher test level, for example with a recording tool.
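Recording tools differ a lot, but the underlying idea is the same. A workflow is captured once as a sequence of steps with the results observed at the time, and it is replayed against every new build. The sketch below illustrates only that idea. The recording format, the `ShopSystem` stand-in for the existing system and the `replay` function are all hypothetical, not the API of any real recording tool.

```python
# A "recording" of a main workflow in a hypothetical format: each step names an
# action, its arguments, and the result observed when the workflow was recorded.
RECORDED_CHECKOUT = [
    ("add_item", ("widget", 2), 2),
    ("add_item", ("gadget", 1), 3),
    ("checkout", (), "ORDER-1"),
]


class ShopSystem:
    """Hypothetical stand-in for the existing system under test."""

    def __init__(self):
        self.cart = {}
        self.orders = 0

    def add_item(self, item, qty):
        self.cart[item] = self.cart.get(item, 0) + qty
        return sum(self.cart.values())  # items now in the cart

    def checkout(self):
        self.orders += 1
        self.cart.clear()
        return f"ORDER-{self.orders}"


def replay(recording, system):
    """Re-run a recorded workflow and list the steps whose result has changed."""
    mismatches = []
    for index, (action, args, expected) in enumerate(recording):
        actual = getattr(system, action)(*args)
        if actual != expected:
            mismatches.append((index, action, expected, actual))
    return mismatches


print(replay(RECORDED_CHECKOUT, ShopSystem()))  # [] means no regressions
```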

You can then combine the recorded regression tests with the tests the development teams deliver to the system test team. The system test team adapts the recorded tests to incorporate the new ones, so that the entire system is tested again.
