May 18, 2016

The A to Z Guide to the Software Testing Process

This software testing guide follows on from the topics we have discussed earlier. We’ve covered a varied set of topics, and spent quite a bit of time on software development methodologies: Agile, waterfall, Scrum, V-model, etc. And with good reason.

Over the years, I’ve noticed how process and methodology play an important role in project success—at times, following the right process is as important as having the right person for a job. You can hire the crème de la crème for your team, but it won’t matter much if they don’t have a robust process to govern themselves during delivery.

So I felt it was time to turn the spotlight on Testing (I am a Tester, after all!). Since this is a refresher, let’s start by emphasising some fundamentals, shall we?

What is Software Testing?

The internet defines Software Testing as the process of executing a program or application with the intent of identifying bugs. I like to define Testing as the process of validating that a piece of software meets its business and technical requirements. Testing is the primary avenue to check that the built product meets requirements adequately.

Whatever the methodology, you need to plan for adequate testing of your product. Testing helps you ensure that the end product works as expected, and helps avoid live defects that can cause financial, reputational and sometimes regulatory damage to your product/organisation.

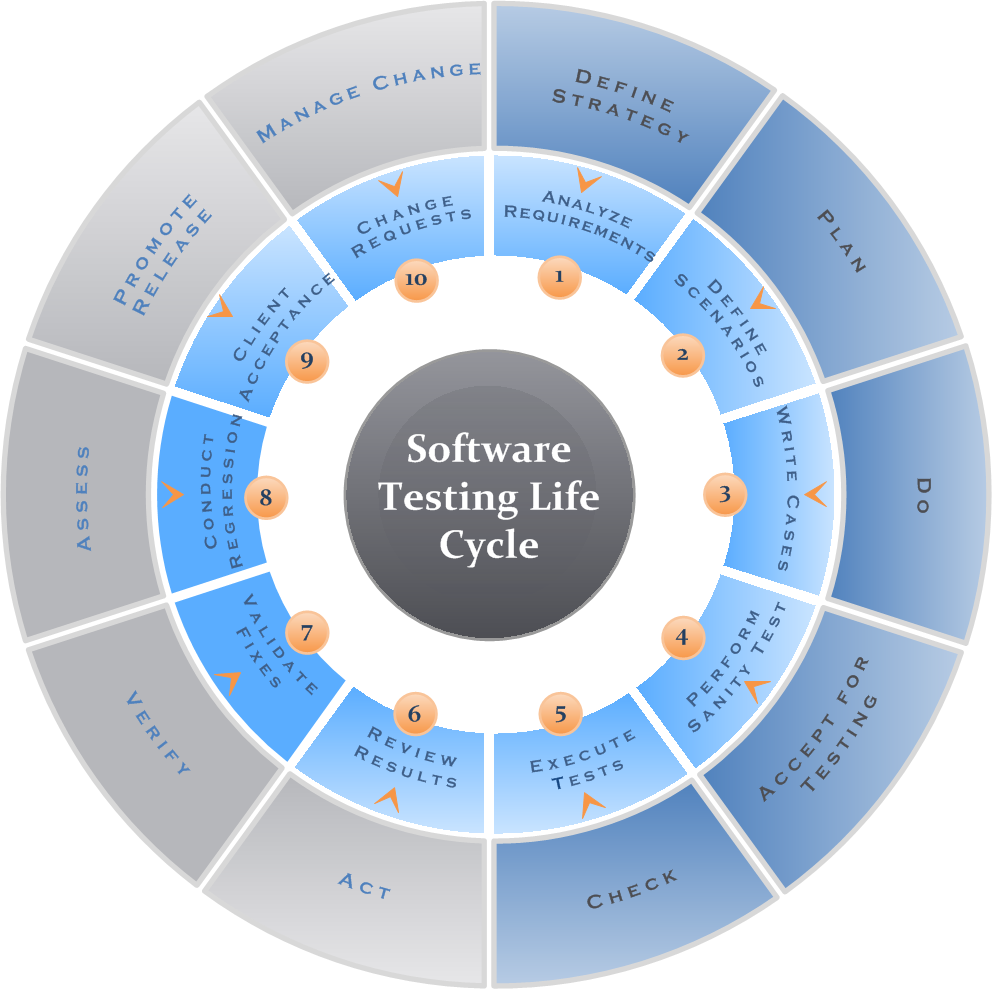

Figure 1 – Software Testing Life Cycle

Who are the key parties involved?

Will you violently disagree if I say that everyone on a project is a key contributor? I can sense you nodding approval, so let’s go forward. Contrary to popular belief, a dedicated Testing phase alone isn’t sufficient to catch all the bugs in your product.

Testing needs to be a way of life, and be part of every conversation and task that a project team performs.

In this sense, everyone involved in a project is a key party. Developers do DIT, Product owners review copy and do hands-on testing, BAs are constantly reviewing requirements, and Project managers and Scrum masters regularly review plans to re-align priorities and extract the best value. In their own way, everyone is testing all the time. As they should.

To bring it all together, you have the Test Manager and Test Leads/Coordinators, Project Manager/Scrum Master, Project Sponsor/Product Owner, and Business Analyst overseeing the Test phases of a project – with the support of Development Leads, Testers, Architects, and other support teams (like the Environments team).

Software Testing Process

Agile or Waterfall, Scrum or RUP, traditional or exploratory, there is a fundamental process to software testing. Let’s take a look at the components that make up the whole.

#1: Test Strategy and Test Plan

Every project needs a Test Strategy and a Test Plan. These artefacts describe the scope of testing for a project:

- The systems that need to be tested, and any specific configurations

- Features and functions that are the focus of the project

- Non-functional requirements

- Test approach—traditional, exploratory, automation, etc.—or a mix

- Key processes to follow, such as defect resolution and defect triage

- Tools—for logging defects, for test case scripting, for traceability

- Documentation to refer to, and to produce as output

- Test environment requirements and setup

- Risks, dependencies and contingencies

- Test Schedule

- Approval workflows

- Entry/Exit criteria

And so on… Whatever methodology your project follows, you need to have a Test Strategy and a Test Plan in place. You can keep them as two separate documents or merge them into one.

Without a clear test strategy and a detailed test plan, even Agile projects will find it difficult to be productive. Why, you ask? Well, the act of creating a strategy and plan brings out a number of dependencies that you may not think of otherwise.

For example, if you’re building a mobile app, a test strategy will help you articulate what Operating Systems (iOS/Android), OS versions (iOS 7 onwards, Android 4.4 onwards etc.), devices (last three generations of each type of iOS device, specific models of Android devices) you need to test the app for.
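To make the support matrix concrete, here is a minimal Python sketch that expands a support strategy into the individual configurations a test plan would need to cover. The platforms follow the example above, but the specific versions and the device labels (`ios-device-1` and so on) are hypothetical placeholders, and `SUPPORT_MATRIX` and `build_test_configurations` are illustrative names rather than part of any tool.

```python
from itertools import product

# Hypothetical support strategy: versions echo the example above,
# device labels are placeholders for "last N generations" of each type.
SUPPORT_MATRIX = {
    "iOS": {
        "versions": ["7", "8", "9"],
        "devices": ["ios-device-1", "ios-device-2", "ios-device-3"],
    },
    "Android": {
        "versions": ["4.4", "5.0", "6.0"],
        "devices": ["android-device-1", "android-device-2"],
    },
}


def build_test_configurations(matrix):
    """Expand each platform's OS versions x devices into (platform, version, device) tuples."""
    configs = []
    for platform, spec in matrix.items():
        for version, device in product(spec["versions"], spec["devices"]):
            configs.append((platform, version, device))
    return configs


configs = build_test_configurations(SUPPORT_MATRIX)
print(len(configs))  # 15 (iOS: 3 x 3, Android: 3 x 2)
```

A full cross-product grows quickly and ignores real-world compatibility (not every device can run every OS version), so in practice you would filter out unsupported pairs or use a pairwise-style reduction to keep the matrix manageable.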

Usually, a mature organisation will already have nailed its device and OS support strategy, and will review it quarterly to keep up with the market; test managers creating a strategy or plan for their project can then validate that enterprise-wide strategy against project-specific deliverables.

You’d be surprised how many projects have to alter their plan significantly because they hadn’t thought enough about support strategy early on. Among other things, the test plan also helps define entry and exit criteria for testing. This is important as a control for the rest of the team. If the deliverables don’t meet a specified level of quality, they won’t enter testing; similarly, if the tested code doesn’t meet specified quality standards, it will not move to the next phase or enter production.

Testing performs this all-important gatekeeping function, and helps bring visibility to any issues that may be brushed under the carpet otherwise.

#2: Test Design

Now that you have a strategy and a plan, the next step is to dive into creating a test suite. A test suite is a collection of test cases that are necessary to validate the system being built, against its original requirements.

Test design as a process is an amalgamation of the Test Manager’s experience of similar projects over the years, testers’ knowledge of the system/functionality being tested and prevailing practices in testing at any given point. For instance, if you work for a company in the early stages of a new product development, your focus will be on uncovering major bugs with the alpha/beta versions of your software, and less on making the software completely bug-proof.

The product may not yet have hit the critical “star” or “cash cow” stages of its existence—it’s still a question mark. And you probably have investors backing you, or another product of your own that is subsidising this new initiative until it can break even. Here, you’re trying to make significant strides—more like giant leaps—with your product before you’re happy to unwrap it in front of customers. Therefore, you’re less worried about superficial aspects like look and feel, and more worried about fundamental functionality that sets your product apart from your competitors.

In such a scenario, you may do less negative testing and more exploratory or disruptive testing to weed out complex, critical bugs. And you may want to leave the more rigorous testing until you have a viable product in hand. So your test suite at the beginning of the product lifecycle will be tuned towards testing fundamentals until you’re close to release.

When you are happy to release a version to your customers, you’ll want to employ more scientific testing to make it as bug-free as possible to improve customer experience. On the other hand, if you’re testing an established product or system, then you probably already have a stable test suite. You then review the core test suite against individual project requirements to identify any gaps that need additional test cases.
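The gap review described above can be sketched as a simple set difference between the requirements in scope and the requirements your existing test cases already cover. This is a minimal illustration, assuming a hypothetical mapping from test case IDs to requirement IDs; in practice that mapping would come from your test management tool.

```python
# Hypothetical IDs: a real mapping would be exported from your
# requirements and test case management tools.
core_suite = {
    "TC-001": {"REQ-LOGIN"},
    "TC-002": {"REQ-LOGIN", "REQ-LOGOUT"},
    "TC-003": {"REQ-BALANCE"},
}

project_requirements = {"REQ-LOGIN", "REQ-BALANCE", "REQ-NOTIFICATIONS"}


def find_coverage_gaps(suite, requirements):
    """Return the in-scope requirements that no existing test case covers."""
    covered = set().union(*suite.values())  # empty set if the suite is empty
    return sorted(requirements - covered)


print(find_coverage_gaps(core_suite, project_requirements))
# → ['REQ-NOTIFICATIONS']
```

Each requirement in the result is a candidate for new test cases before the project suite is signed off.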

With good test case management practices, you can build a high-quality test bank that significantly reduces your team’s planning and design effort.

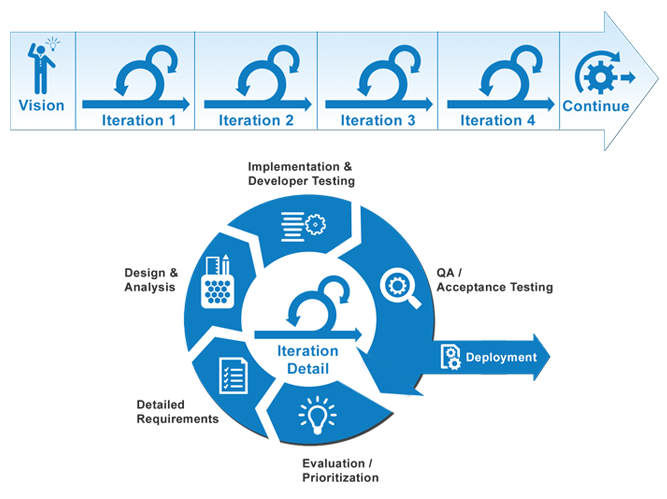

Figure 2 – Agile Testing Life Cycle

#3: Test Execution

You can execute tests in many different ways: as single, waterfall-style SIT (System Integration Test) and UAT (User Acceptance Test) phases; as part of Agile sprints; supplemented with exploratory tests; or with test-driven development. Ultimately, you need to do an adequate amount of software testing to ensure your system is (relatively) bug-free.

Let’s set methodology aside for a second, and focus on how you can clock adequate testing. Let’s go back to the example of building a mobile app that can be supported across operating systems, OS versions, devices. The most important question that will guide your test efforts is “what is my test environment?”.

You need to understand your test environment requirements clearly to be able to decide your testing strategy. For instance, does your app depend on integration with a core system back end to display information and notifications to customers? If yes, your test environment needs to provide back end integration to support meaningful functional tests.

Can you commission such an end-to-end environment to be built and ready for your sprints to begin? Depending on how your IT organisation is set up, maybe not. This is where the question of agile vs a more flexible approach comes into the picture. Could you have foreseen this necessity well before the sprints began? Probably not.

Given how Agile projects are run, you may only have a couple of weeks between initiating a project and starting delivery sprints, which isn’t enough time to commission an end-to-end test environment if one doesn’t already exist. If everything goes fine, you’ll have a test environment to your liking, configured to support your project, with all enablers built to specifications. If not, then your test strategy will be different.

In this example, we’re talking about running front-end tests against a dummy back end to support in-sprint testing, and waiting until an integrated test environment is ready. It is common practice to schedule integration tests just after delivery sprints and before release. Your team can then run a dedicated System Integration Test, focusing on how the app components work with the back end to deliver the required functionality.

So while app-specific bugs will primarily be reported during the sprints, functional end-to-end bugs will crop up during the integration test. You can follow this up with a UAT cycle to put the finishing touches on look and feel, copy, etc.

How your team executes test cycles depends on the enabling infrastructure, project and team structure in your organisation.
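One way to picture the dummy back end is as a stub that implements the same interface the app expects from the real core system. The sketch below is a minimal Python illustration; `NotificationBackend`, `StubBackend` and `render_notification_badge` are hypothetical names, and the badge logic simply stands in for whatever front-end behaviour you are testing in-sprint.

```python
from typing import Dict, List, Protocol


class NotificationBackend(Protocol):
    """What the app needs from the core system back end (hypothetical interface)."""

    def fetch_notifications(self, customer_id: str) -> List[str]:
        ...


class StubBackend:
    """Dummy back end returning canned data so front-end tests can run in-sprint."""

    def __init__(self, canned: Dict[str, List[str]]):
        self._canned = canned

    def fetch_notifications(self, customer_id: str) -> List[str]:
        return self._canned.get(customer_id, [])


def render_notification_badge(backend: NotificationBackend, customer_id: str) -> str:
    """Front-end logic under test: show a count badge, or nothing when empty."""
    count = len(backend.fetch_notifications(customer_id))
    if count == 0:
        return ""
    return "99+" if count > 99 else str(count)


# In-sprint tests exercise the front end against the stub; the same
# function can later be run against the real back end during SIT.
stub = StubBackend({"alice": ["Payment received", "Statement ready"]})
assert render_notification_badge(stub, "alice") == "2"
assert render_notification_badge(stub, "bob") == ""
```

Because the front-end code depends only on the interface, swapping the stub for a client of the real back end should not require changing the tests themselves, only the object passed in.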

Reviewing test environment requirements early on is now a widely recognised cornerstone for good project management. Leaders are giving permanent, duplicate test environments a good deal of thought as an enabler for delivery at pace.

#4: Test Closure

Right—so you have done the planning necessary, executed tests and now want to green-light your product for release. You need to consider the exit criteria for signalling completion of the test cycle and readiness for a release. Let’s look at the components of exit criteria in general:

- 100% requirements coverage: all business and technical requirements have to be covered by testing.

- Minimum % pass rate: a common target is for at least 90% of all test cases to pass.

- All critical defects to be fixed: self-explanatory. They are critical for a reason.

As a rule of thumb, I’ve seen projects mandate 90% pass rate and all critical defects being fixed before the team can move on to the next phase of the project. And on big transformation initiatives, I’ve seen individual releases move to the next phase (to aid beta pilots) with as little as 80%, with the understanding that the product won’t reach the customer until mandatory exit criteria are met. Ultimately, what works for your team is down to your circumstances and business demands.
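The exit criteria above lend themselves to an automated check at the end of a test cycle. The sketch below assumes pass rate is calculated as passed tests divided by executed tests (some teams divide by planned tests instead), and the `CycleResults` fields and `meets_exit_criteria` function are hypothetical. The threshold is a parameter, so the same check can cover the 90% rule of thumb or a lower bar for a beta pilot.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CycleResults:
    """Hypothetical summary of one test cycle."""
    requirements_total: int
    requirements_covered: int
    tests_executed: int
    tests_passed: int
    open_critical_defects: int


def meets_exit_criteria(results: CycleResults, min_pass_rate: float = 0.90) -> Tuple[bool, List[str]]:
    """Check coverage, pass rate and critical defects; return (passed, failure reasons)."""
    reasons = []
    # 100% requirements coverage
    if results.requirements_covered < results.requirements_total:
        reasons.append("requirements coverage below 100%")
    # Minimum pass rate (assumed definition: passed / executed)
    pass_rate = results.tests_passed / results.tests_executed if results.tests_executed else 0.0
    if pass_rate < min_pass_rate:
        reasons.append(f"pass rate {pass_rate:.0%} below {min_pass_rate:.0%}")
    # All critical defects fixed
    if results.open_critical_defects > 0:
        reasons.append(f"{results.open_critical_defects} critical defect(s) still open")
    return (len(reasons) == 0, reasons)


ok, reasons = meets_exit_criteria(CycleResults(120, 118, 400, 340, 2))
print(ok)  # False
for reason in reasons:
    print(reason)
```

Returning the reasons, not just a yes/no, gives the team something concrete to discuss at the go/no-go meeting.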

Remember that nobody can afford serious defects to remain unfixed when you launch to customers—especially if your product handles sensitive information or financials.

Polish things off with a Test Summary and Defects Analysis, providing statistics about testing: how many high/medium/low defects were raised, which functions/features were affected, where defects were most concentrated, and which approaches were used to resolve defects (defer vs fix), along with a Traceability Matrix to demonstrate requirements coverage.
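The statistics for a Test Summary and Defects Analysis are straightforward to compute once defects are exported from your tracker. Here is a minimal Python sketch, assuming a hypothetical defect log with `severity`, `feature` and `resolution` fields; real trackers will use their own field names and export formats.

```python
from collections import Counter

# Hypothetical defect log; a real one would be exported from your defect tracker.
defects = [
    {"id": "D-1", "severity": "high", "feature": "payments", "resolution": "fix"},
    {"id": "D-2", "severity": "medium", "feature": "payments", "resolution": "fix"},
    {"id": "D-3", "severity": "low", "feature": "profile", "resolution": "defer"},
    {"id": "D-4", "severity": "medium", "feature": "notifications", "resolution": "defer"},
]


def summarise_defects(defect_log):
    """Count defects by severity, feature and resolution, and find the hotspot feature."""
    by_severity = Counter(d["severity"] for d in defect_log)
    by_feature = Counter(d["feature"] for d in defect_log)
    by_resolution = Counter(d["resolution"] for d in defect_log)
    # The feature with the most defects is where they are most concentrated
    hotspot = by_feature.most_common(1)[0][0] if by_feature else None
    return {
        "by_severity": dict(by_severity),
        "by_feature": dict(by_feature),
        "by_resolution": dict(by_resolution),
        "hotspot": hotspot,
    }


summary = summarise_defects(defects)
print(summary["by_severity"])  # {'high': 1, 'medium': 2, 'low': 1}
print(summary["hotspot"])      # payments
```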

Before we go – The Importance of Non-functional Requirements Testing

A lot has been said about the importance of NFRs and how any good project needs to review non-functional requirements alongside functional requirements to be effective and successful.

I have written enough about NFRs in the past, so I’m not going to repeat the detail here. What I will emphasise, however, is the importance of understanding the testing requirements for your NFRs at the beginning of your project.

A lot of the NFR tests are technical in nature, and need specific deliverables – performant code, additional hardware, accessibility rules etc. Reviewing these alongside functional requirements will help your team identify additional testing needs (such as multi-language support for your app), as well as plan for such tests well in advance so there are no surprises, such as ugly UAT bugs or legal/compliance concerns late in the project. Test plans should include NFR review as a line item.
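Multi-language support is a good example of an NFR that can be checked mechanically. The sketch below, assuming a hypothetical in-memory string catalogue keyed by locale, reports any UI strings that exist in the base language but are missing from another supported locale; real apps would load these from their resource files.

```python
# Hypothetical string catalogue; real apps would load these from resource files.
SUPPORTED_LOCALES = ["en", "fr", "de"]

catalogue = {
    "en": {"welcome": "Welcome", "logout": "Log out", "balance": "Balance"},
    "fr": {"welcome": "Bienvenue", "logout": "Se déconnecter", "balance": "Solde"},
    "de": {"welcome": "Willkommen", "logout": "Abmelden"},
}


def missing_translations(catalogue, locales, base_locale="en"):
    """Return {locale: [missing keys]} for keys present in the base locale."""
    base_keys = set(catalogue[base_locale])
    gaps = {}
    for locale in locales:
        # A locale with no catalogue at all is missing every base key
        missing = sorted(base_keys - set(catalogue.get(locale, {})))
        if missing:
            gaps[locale] = missing
    return gaps


print(missing_translations(catalogue, SUPPORTED_LOCALES))
# → {'de': ['balance']}
```

Running a check like this on every build surfaces translation gaps long before they turn into UAT bugs.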

Encourage your team to think of NFR tests not as an additional task but as a basic one that they need to deliver.

Recap

As I said at the beginning, the methodology you follow doesn’t preclude any of the above process steps.

In fact, on projects running Agile and related methodologies, diligently following testing principles and documentation will bring much-needed structure to how your team works. Testing is a fundamental and crucial part of any software project.

Plan for testing adequately, and reap the benefits of delivering a bug-free product first time, every time.

I hope this blog provided a refresher, and that you found the resources useful! Share widely if we helped you in a small way, so others may benefit as well. Leave your comments below and have a healthy discussion about the software testing process.
