April 19, 2016

5 Things About Compatibility Testing You Need to Know Today

Of late, there has been a lot of emphasis on building software that supports a wide range of platforms. And rightfully so. In an increasingly low-margin software business, you need to deliver products that work on multiple platforms and are relevant to a wide range of customers. This is evident in how almost every app maker in the market supports both Android and iOS as a basic feature.

I’m sure you agree with me when I say that compatibility is one of the most significant determinants of success for an IT product. So much so that I felt the need to dedicate a blog post to the topic. In this post, I’ll cover what I believe are the key things to know about compatibility.

Quick Refresher – What is Compatibility Testing?

Before we begin, let’s jog our memory a bit.

Compatibility is one of the many Non-Functional Requirements (NFRs) that are delivered as part of a project. Compatibility Testing is the means to confirm that compatibility requirements have been delivered.

A quick internet search will tell you that Compatibility Testing covers the following about the intended operating environment for a software product:

- Hardware compatibility

- Network/bandwidth/carrier compatibility

- Compatibility with different Operating Systems and databases

- Peripheral devices, systems, software

- User Experience

For instance, when Apple release a new version of iOS, they conduct rigorous testing to check that the update works on all supported iOS devices (iPhones, iPads, iPods), device configurations (e.g. the most recent update may only support models from iPhone 5 onwards), carrier networks/bands etc. This is compatibility testing.

Compatibility testing helps your team deliver a software product that works seamlessly across all configurations of its intended computing environments, providing a consistent experience and performance across platforms and to (well, almost) all users.

Let’s take a look at the five things you need to know about Compatibility Testing today:

#1 – Compatibility testing is necessary

First things first – you need to plan for and execute Compatibility Testing. It adds one more test cycle at the end of your project (or additional scope to your sprints), and needs time, money and resources. That said, Compatibility Testing is mandatory. It represents the best chance to properly evaluate whether your product works seamlessly in its intended operating environments.

#2 – Compatibility is one of the last things that a project team thinks about – and that is not a good thing

In general, this is true for all NFRs. So many projects fail simply because they didn’t discuss NFRs in tandem with functional requirements. Scrambling to deliver NFRs close to the delivery date is a common, and unwelcome, sight. I cannot emphasize the importance of NFRs enough – these are the enablers for your product, and will be the difference between success and failure.

Specifically, you need to consider upfront the compatibility requirements and the testing necessary to confirm these requirements have been met.

Why? So you can ensure your scope is tuned to delivering a product that actually works with the intended hardware, networks, peripheral systems and users.

For example, I was brought in to help recalibrate a large and complex mobile project for a bank, where the project team hadn’t considered whether the current messaging infrastructure was tuned to deliver push notifications. This was due in large part to the infrastructure in question being hosted and run by a different IT department that reported to a different manager, who had differing priorities. As I write this, I am reminded how silly we all felt it was for the project team to not have discussed compatibility of their mobile app features with the backend infrastructure that enables it.

You’d be surprised at how many such compatibility issues crop up very late on large (and therefore, important) projects. Reviewing compatibility requirements (and NFRs in general) during functional requirements discussions will help you plan for Compatibility Testing better.

#3 – You can build Compatibility tests into upstream Test Cycles, or Sprints – or Not

Agile or not, your project needs to execute compatibility tests – how you plan the tests in is down to your experience, your team’s preference for methodology, and other factors.

If you’re using scrum, it is good practice to build relevant compatibility tests into the testing scope for your sprints. This also means you need to plan ahead and set up relevant infrastructure necessary to aid in-sprint compatibility testing. To some extent, this works.

I say ‘to some extent’ because how much compatibility testing you can cover within sprints is directly influenced by the scope for your scrum. Let me explain with an example.

If you’re working on a project that will deliver redesigned UI for your company’s website using existing infrastructure, your scope for compatibility testing could be the following:

- Browser compatibility, OS compatibility and User experience – to check that the web pages are ‘responsive’, and render accurately and consistently across a number of browsers (e.g. IE, Safari, Chrome and Firefox – on Mac, Windows, iOS and Android).

- Hardware and network compatibility – to check that the hardware and networks the web pages will run on are able to handle the processing required.

- Peripheral systems compatibility – to check that the new pages can interact with existing back-end infrastructure efficiently.
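To make the first bullet concrete, here is a minimal Python sketch that enumerates the browser and platform pairs to test. The `UNAVAILABLE` set and the `build_matrix` helper are illustrative assumptions rather than part of any tool; adjust the exclusions to match what your customers actually run.

```python
from itertools import product

BROWSERS = ["IE", "Safari", "Chrome", "Firefox"]
PLATFORMS = ["Mac", "Windows", "iOS", "Android"]

# Assumption: pairings we do not expect customers to use, so no test run is planned.
UNAVAILABLE = {
    ("IE", "Mac"), ("IE", "iOS"), ("IE", "Android"),
    ("Safari", "Windows"), ("Safari", "Android"),
}


def build_matrix(browsers, platforms, unavailable):
    """Return every (browser, platform) pair that is not explicitly excluded."""
    return [
        (browser, platform)
        for browser, platform in product(browsers, platforms)
        if (browser, platform) not in unavailable
    ]


matrix = build_matrix(BROWSERS, PLATFORMS, UNAVAILABLE)
for browser, platform in matrix:
    print(f"{browser} on {platform}")
```

Even this small example leaves eleven combinations to cover, which is why it pays to agree on the matrix before the sprints begin.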

There may be other elements, but you get the point. Now let’s think about the scope for a scrum team that is delivering the web pages. It’s quite possible that when the sprints begin, you are able to arrange a test environment for your team that allows them to plan all compatibility tests relevant to the sprint’s scope. Then power to you!

Then again, it’s quite possible that you may not be able to secure your team the complete testing environment necessary to conduct all relevant compatibility tests within each sprint. On projects that are slightly more complex than just redesigning web pages, it’s quite often the latter.

So depending on your project, organization, time, cost and other constraints, you can choose to build compatibility testing into regular test effort, or make it a standalone cycle. Or both.

#4 – It is impractical, and unnecessary, to target absolute coverage

Let’s continue with the example of redesigning a website. Some of your customers may be using a much older version of Internet Explorer – say v9. Your test strategy needs to ensure you’re testing your product to cover almost all your customers. Note the emphasis on “almost”. You need to think carefully about testing for outliers.

Where a very small minority of your customers are still using older and unsupported versions of IE and experience difficulties in using your website as a result, a simpler and more cost-effective approach could be to have your helpdesk explain to them that any issues will be sorted once they upgrade to the latest IE version.

You can also make policies about browser compatibility and post these prominently on your website. A good practice is to run a compatibility check when your website loads on the customer’s browser and, where it is an unsupported version or browser, to display a prominent message and calls to action for the customer to upgrade or use a different browser.

A step further would be to block a customer out until they upgrade to a supported version.

This second scenario is necessary when you know your product won’t work well with the unsupported browser version.
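The two policies above (warn, or block) can be sketched as a small decision function. This is a minimal Python sketch, assuming the browser name and major version have already been detected elsewhere; the `MIN_SUPPORTED` and `MIN_WORKING` thresholds, the `Action` enum and the `decide_action` helper are all hypothetical names and values chosen for illustration.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"   # supported: load the site normally
    WARN = "warn"     # unsupported: show a prominent upgrade message
    BLOCK = "block"   # known not to work: keep the customer out until they upgrade


# Hypothetical policy values: the lowest major version you actively support,
# and the lowest major version on which the product is known to work at all.
MIN_SUPPORTED = {"IE": 11, "Chrome": 45, "Firefox": 40, "Safari": 8}
MIN_WORKING = {"IE": 9, "Chrome": 30, "Firefox": 30, "Safari": 6}


def decide_action(browser: str, major_version: int) -> Action:
    """Map a detected browser and version to allow, warn or block."""
    if browser not in MIN_SUPPORTED:
        # Unknown browser: untested, so warn rather than block.
        return Action.WARN
    if major_version >= MIN_SUPPORTED[browser]:
        return Action.ALLOW
    if major_version < MIN_WORKING[browser]:
        return Action.BLOCK
    return Action.WARN


print(decide_action("IE", 10))
```

Keeping the thresholds in plain data means the policy you publish on your website and the check you run can be updated together.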

Optimize your strategy to include only versions that account for – say – 95% to 98% of your customer base. Support systems like a help desk will be able to manage the unsupported 2% to 5% at a fraction of the cost and time you would need to continue supporting older configurations.
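One way to arrive at that list is to rank configurations by how many customers use them and stop once the target is reached. The sketch below is a minimal Python example under that assumption; the visitor counts are invented for illustration, and `versions_to_support` is a hypothetical helper, not a feature of any analytics product.

```python
def versions_to_support(user_counts, target_percent=95):
    """Pick the most-used configurations until target_percent of users is covered.

    Returns the selected configurations and the number of users they cover.
    """
    total = sum(user_counts.values())
    selected = []
    covered = 0
    # Most popular configurations first.
    ranked = sorted(user_counts.items(), key=lambda item: item[1], reverse=True)
    for name, count in ranked:
        # Integer comparison avoids floating-point rounding at the threshold.
        if covered * 100 >= target_percent * total:
            break
        selected.append(name)
        covered += count
    return selected, covered


# Invented numbers: visitors per browser over some reporting period.
visitors = {
    "Chrome": 5200,
    "Safari": 2100,
    "Firefox": 1400,
    "IE 11": 900,
    "IE 10": 250,
    "IE 9": 100,
    "IE 8": 50,
}

selected, covered = versions_to_support(visitors, target_percent=95)
print(selected, covered)
```

With these made-up figures, four configurations reach the 95% target, and the three older IE versions fall to the help desk.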

#5 – Forward and Backward compatibility are achievable, but not 100%

You want to build for the future – yes, we all want to build a website, operating system, device or piece of software that can go on and on for years without needing an upgrade or replacement. Take mobile apps for instance – there are numerous examples of seemingly transcendent apps whose makers felt their customers would never tire of using them.

We all know where Angry Birds and Temple Run are today – I can’t remember the last time I actually saw anybody play Angry Birds on my commute to work.

This points to a simple truth: while building for the future is a good thing, your investment in a product’s upkeep should always consider a key aspect: the product’s demise. If you’re still walking around with a mobile device from five years ago, you’re going to find that the current generation of most mobile apps doesn’t support the device and OS you’re running, because you’re probably part of the 1% that still uses a phone from the stone age (yes it is, in smartphone terms).

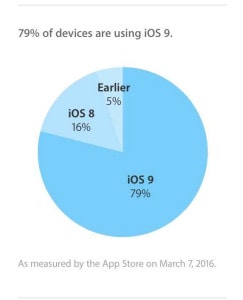

When it comes to compatibility, plan to support the past and next two generations of your computing environment at most. And tune your testing efforts towards just this goal. Good manufacturers are great at signposting when older devices and operating systems are due to go out of support. For instance, there is no point in trying to support the less than 5% of your customers that are still using iOS 7, 6 or 5.

In summary, ensuring compatibility of your product with its operating environment will reap rich benefits. It is a necessary and key part of your project life cycle. Encourage your team to review compatibility testing needs early on in your projects, and to optimize the scope for such testing to avoid over- or under-testing.

Do you have your own experiences to share about Compatibility Testing? Share your comments below, and let’s have a healthy discussion.
