March 3, 2016

10 of the Worst Requirements I have Ever Seen

How hard is it to write good requirements documentation? In this blog post I share 10 of the worst examples of requirements documentation I have come across, which haunt me to this day.

Introduction

Writing requirements is one of the most important aspects of product development since so many team members will depend on these lists to carry out their jobs properly.

It follows, therefore, that requirements should be written to a high standard, and that poor documentation will negatively impact the team’s performance.

In this article, I take a look at the 10 worst requirements that I’ve come across during my years of experience.

#1 – “The system must have good usability”

#2 – “Response time should be less than X seconds”

#3 – “Round-the-clock availability”

#4 – “The system shall work just like the previous one, but on a new platform”

#5 – “The system has to be bug-free”

#6 – “Reporting”

#7 – “Make it accessible”

#8 – “X cannot change”

#9 – “Easy to use”

#10 – “It has to be robust”

#1 – “The system must have good usability”

This one gets me every time.

Of course, a system should have good usability!

Every tester and developer knows that. But to whom does it have to ‘feel good’?

Describe the user group(s) and the knowledge expected from them.

A common theme in this list of cringe-inducing requirements is their vagueness and lack of objective criteria.

One example of a measurable criterion is the time needed to complete a specified action.

A better way to express this requirement is: “A customer service rep should be able to enter 3 issues in less than 15 minutes”. This is highly measurable.

Another way to make usability measurable is to set standards.

If there is a documented company standard, you can state that the system should be built according to the standard.

Of course, a standard stating that the OK button should be placed to the right of the Cancel button does not automatically mean that the system gets high usability, and the only way to really know whether the system is okay is to perform usability tests.

#2 – “Response time should be less than X seconds”

Fair enough, we all want our software to be blazingly fast. But under which conditions exactly are you expecting a two-second response time?

Does this figure take into consideration natural variances in the response time of the system? And does it refer to a particular functionality of the product, or does the PO expect a two-second response time across the board, even for critical parts of the system?

This type of requirement is doubly devious because it is cleverly disguised by the inclusion of an objective amount which gives it the appearance of legitimacy. But probe a little bit deeper and the requirement breaks down under the weight of its absurdity.

Any measurement should be given in a particular context, for example the search functionality or saving a new customer to the database. You must also state under what circumstances it should be measured, for example on a standardized desktop within the firewall or via ADSL on a slower computer.

Also consider natural variances in the system. On salary payment day, for instance, many banks are overloaded. Do you have variances on other dates, such as the beginning of a new month or a new year?
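To make this concrete, here is a minimal sketch of how such a requirement could be written down and checked. Everything in it is an assumption made for illustration: the `ResponseTimeRequirement` class, the choice of the 95th percentile, the two-second limit, and the sample measurements are all hypothetical and not taken from any real project.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseTimeRequirement:
    operation: str        # which functionality is being measured
    conditions: str       # measurement environment and load, in words
    percentile: float     # share of requests that must meet the limit
    limit_seconds: float  # the "X" from the original requirement


def percentile_value(samples, percentile):
    """Nearest-rank percentile: the smallest sample that covers
    `percentile` percent of all measurements."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return ordered[rank - 1]


def is_met(requirement, samples):
    """True if the required share of requests stays within the limit."""
    return percentile_value(samples, requirement.percentile) <= requirement.limit_seconds


# Hypothetical requirement: context and conditions are stated explicitly.
search = ResponseTimeRequirement(
    operation="customer search",
    conditions="standardized desktop within the firewall, salary payment day load",
    percentile=95,
    limit_seconds=2.0,
)

# Hypothetical measurements in seconds, not real data.
measured = [0.8, 1.1, 0.9, 1.4, 1.2, 3.5, 1.0, 0.7, 1.3, 1.6]

print(is_met(search, measured))
```

Stating a percentile rather than a single number is one possible way of acknowledging natural variance: a single slow outlier is then either tolerated or not, depending on what the stakeholder actually agreed to.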

“[Response time] is doubly devious because it is cleverly disguised by the inclusion of an objective amount which gives it the appearance of legitimacy.”

#3 – “Round-the-clock availability”

Say what? You mean 24/7/365 support? And who’ll foot the bill exactly?

Neglecting the time, money and energy costs that go into the development and testing of the client’s requirements is a serious mistake that leads to certain disaster.

In these cases, the team has to take on the role of advisor and gently make the client aware of any obvious problems in their requirements.

In some situations, 24/7/365 is a reasonable requirement, for instance when it comes to internet banks. Often, however, this requirement is too costly to be considered realistic.
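A quick calculation can help in that conversation. The sketch below converts an availability target into the downtime it allows per year; the function name and the three example targets are my own assumptions, chosen only to show how quickly the allowance shrinks.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def allowed_downtime_hours(availability_percent):
    """Hours per year the system may be unavailable at a given target."""
    return HOURS_PER_YEAR * (100 - availability_percent) / 100


# Hypothetical targets a stakeholder might be asked to choose between.
for target in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(target)
    print(f"{target}% availability allows {hours:.2f} hours of downtime per year")
```

A target of 99% still leaves about 87.6 hours of downtime per year, while 99.9% leaves only about 8.76 hours. Putting numbers like these next to the cost of achieving them makes the trade-off easier to discuss.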

You will have to either find out whether this requirement is truly that important or reach a compromise with the stakeholder.

Reaching a compromise on the stakeholder’s original demands and ‘optimizing’ (a useful phrase to use during negotiation!) their requirements to fit the project’s scope and budget better will save the team a lot of hassle in the long run.

#4 – “The system shall work just like the previous one, but on a new platform”

This is a classic mistake.

What is usually meant is “but don’t implement ‘these features’ since we do not use them anymore.” And “we trust you also take into account all the undocumented complaints that we have had over the years about some of the features that we hate.”

When rebuilding a system with other techniques, you must do proper requirements management again, since needs have changed. A new platform also comes with pros and cons, which have to be considered. Features that worked in one way earlier will not work exactly the same way when the platform is changed.

A cheaper solution would be to create a quick prototype of the system using the new technology.

Perform workshops and behavioral studies on real users to find out the gaps between the prototype and the final product. This is also a good way to elaborate on new features and possible constraints that come with the new platform.

#5 – “The system has to be bug-free”

This shows an immature way of looking at quality assurance and involvement from both customer and supplier.

You have to establish a proper change management process and a testing process that involves both parties with clear responsibilities early on.

Other things that often have to be discussed in immature projects are documentation, help system, and end-user training.

“Establish a proper change management process and a testing process that involves both parties with clear responsibilities early on.”

#6 – “Reporting”

Crunching through complex data and returning actionable insights, preferably with plenty of snazzy visualizations that highlight trends and patterns in a system, is one of the most important functions of software, no matter which industry it is used in.

Which explains why everyone requesting new software for their business comes up to you asking for the ability to “create reports”.

Like most of the bad requirements we have already tackled, the problem here isn’t what’s being requested but, rather, what’s being omitted from the request. There is no indication of how exhaustive a report should be, which metrics it should include, or who is authorized to generate and read it.

#7 – “Make it accessible”

The last point leads us neatly to our next nightmare of a requirement.

Accessibility can be wide or restricted, but in each case a clear profile of the type of users that will be allowed to interact with the system is needed in order to write relevant test cases for the scenarios likely to be encountered.

Moreover, accessibility doesn’t necessarily exist in a binary yes/no state. Even when the doors are flung wide open for everyone to interact with the system, some people may have certain privileges that others don’t have.

This graduated accessibility is tightly linked with the roles played by different classes of users, which in turn affects the actions they are authorized to carry out.

#8 – “X cannot change”

Expanding on the idea of different levels of privilege for different users leads me to consider another howler that sends teams into a fit of despair.

Whenever clients come up to you insisting that certain aspects of the system cannot change, your prompt rebuttal should be along the lines of: “Who cannot change X?”.

More often than not, you’ll discover that their original request was actually a shorthand for the fact that certain users cannot change certain aspects of the system without permission from a supervisor or other users with high-level credentials.
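Once the answer to “Who cannot change X?” is known, the requirement can be written down as a simple table of roles and permissions. The sketch below is only an illustration: the roles (`clerk`, `supervisor`) and the fields (`credit_limit`, `delivery_address`) are hypothetical names that I made up, not something from a real system.

```python
# Hypothetical mapping: which roles may change which field directly.
EDIT_PERMISSIONS = {
    "credit_limit": {"supervisor"},
    "delivery_address": {"clerk", "supervisor"},
}


def can_change(role, field):
    """Return True if the given role may change the field directly.

    Fields that are not listed cannot be changed by anyone.
    """
    return role in EDIT_PERMISSIONS.get(field, set())


print(can_change("clerk", "credit_limit"))
print(can_change("supervisor", "credit_limit"))
print(can_change("clerk", "delivery_address"))
```

A table like this is easy for a client to review, and each row translates directly into a test case.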

“More often than not, you’ll discover that the original requirement is a shorthand for the truth.”

#9 – “Easy to use”

This tendency for clients to use shorthand language when communicating requirements points to the main reason horrible requirements exist at all.

I’m in total agreement with those testers who have explained on a variety of internet fora that poor requirements are actually miscommunicated requirements.

Our job, then, is to help bring clarity and practical relevance to what our clients tell us by intelligently probing the reasons behind their statements.

Making software “easy to use” is a common requirement that requires expanding upon to implement it in practice.

- What makes software easy to use, according to the client?

- Is it having a short training time for end-users to master the finished product?

- How important is this for the client and the company they represent?

These questions all help shed light on the relative priority of a requirement, which otherwise would be just another of those standard requests you get all the time.

#10 – “It has to be robust”

Finally, rounding off our list of horrible requirements is this gem of a statement.

Again, the problem here isn’t the requirement per se. Robust software is indeed a very desirable thing to have, but there is no quantitative element in that statement to align the tester’s perception with the client’s desired outcome.

Translating robustness into the metrics that are generally used to give an indication of this quality is a quick and simple way to beef up the information provided by the client.

In this case, inquiring about the target time to restart after failure, for example, helps anchor the requirement to the client’s practical needs.
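As a minimal sketch of what that could look like, suppose the client agrees that the system must be back up within five minutes of a failure. The five-minute target and the incident log below are assumptions invented for this example, not real data.

```python
from datetime import datetime, timedelta

# Assumed target agreed with the client: restart within 5 minutes.
MAX_RESTART = timedelta(minutes=5)

# Hypothetical incident log: (time of failure, time service was restored).
incidents = [
    (datetime(2016, 1, 4, 9, 0, 0), datetime(2016, 1, 4, 9, 2, 30)),
    (datetime(2016, 2, 11, 14, 15, 0), datetime(2016, 2, 11, 14, 22, 0)),
]


def restart_times(log):
    """Duration of each outage, from failure to restored service."""
    return [restored - failed for failed, restored in log]


def violations(log, limit):
    """Outages that took longer than the agreed restart time."""
    return [duration for duration in restart_times(log) if duration > limit]


print(violations(incidents, MAX_RESTART))
```

With a number like this in place, “robust” stops being a matter of opinion: either the restart time was within the agreed limit or it was not.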

“There needs to be a quantitative element that aligns the tester’s perception with the client’s desired outcome.”

Concluding remarks

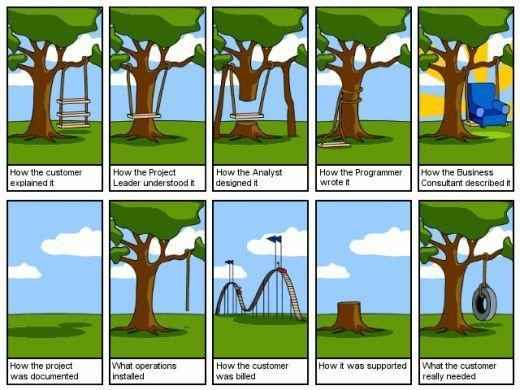

I find that the image above hilariously sums up the state of communication between the parties involved in software development and testing.

We all have a part to play to improve communication within teams and with clients.

As mentioned above, we should be willing to take up the responsibility of helping our clients define their software requirements more precisely and tease out the information the team needs to produce quality software that delivers on the client’s wishes.

Recap

- A common quality of cringe-inducing requirements is their vagueness and lack of objective criteria.

- Any measurement should be given in a particular context.

- When rebuilding a system with other techniques, you must do proper requirements management again, since needs have changed.

- Establish a proper change management process and a testing process that involves both parties with clear responsibilities early on.

- More often than not, you’ll discover that the original requirement is a shorthand for the truth.

- There needs to be a quantitative element that aligns the tester’s perception with the client’s desired outcome.

What’s Next

How many of these 10 worst requirements have you encountered in your work?

Tell us your stories of spine-chilling requirements in the comments section.

Finally, share this article and help more requirements professionals sleep soundly at night!
