March 10, 2015

Why writing test cases is like writing code

The divide between testing and development has long been taken as absolute by members of both professions. On closer inspection, however, the idea partly falls apart. There are many cases where writing test cases and writing code follow very similar principles.

Test cases may not look like code, but in some important ways they behave like code. As a result, certain rules and principles that developers follow when writing code can easily be adopted by testers when writing test cases.

Both test cases and code consist of written information that accumulates changes and additions over time. When the changes are small, they tend to be applied as a series of minor tweaks and side clauses, until after a while the whole thing looks like a tangle of illicit power cable hookups.

Just like with code, test cases are vulnerable to “rotting” even when you don’t add to them.

Software rot, or code rot, is what developers call (only half in jest) the way software appears to degrade over time even though “nothing has changed”.

In fact, the world around it has moved forward, as it always does. Business expectations have gradually changed, technologies have evolved, server environments have been upgraded, and so on. Since the code has not been updated to match, it is no longer in tune with its surroundings and appears to have degraded.

In software development, an important tool in the fight against tangled and degraded code is refactoring.

Refactoring means restructuring existing code so that it shows the same external behaviour but has higher internal quality. To stick with the same analogy, it means detangling the power cables while keeping the power flowing to the same houses.

This concept can also be applied to test cases. I will explain with the help of one of the most common refactoring methods from the software development realm: the extract method.

The Extract Method

Test cases and code tend to grow bigger and bigger as time passes.

A feature starts out simple, and you write a small, simple test case for it. Then a new business rule is introduced, and the rule gets added to the original code or test case. A bug uncovers an edge case, and that gets added too, and so on.

In code, this leads to methods growing longer and longer, while in testing it’s often the test cases that grow. If you don’t watch out, you can end up with a single test case which tries to cover all aspects of the feature.

Obviously this is not optimal, because:

- It makes the test case and test run hard to review, because it is hard to see what is covered and what is not.

- It makes the test case hard to edit, because any new step needs to consider the effects of all previous steps.

- It makes for inefficient testing, because as soon as one part needs re-testing, the whole test case gets re-run, so some parts end up being tested many times over.

When this happens in code, we break up the large method into smaller ones by extracting parts of the original, long method into new methods. We aim for small methods with a clear purpose and a clear name.
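To make the code side of the analogy concrete, here is a minimal sketch of Extract Method in Python. The order-summary function, its pricing rule and all the function names (`order_summary_long`, `validate_items`, `subtotal_of`, `format_summary`, `order_summary`) are hypothetical and chosen purely for illustration. The long function is split into small, clearly named functions while the external behaviour stays the same.

```python
# Before: one long (hypothetical) function that does everything in sequence.
def order_summary_long(items, discount):
    # Validate the input
    for name, price, qty in items:
        if price < 0 or qty < 0:
            raise ValueError(f"invalid line item: {name}")
    # Compute the subtotal
    subtotal = 0.0
    for _, price, qty in items:
        subtotal += price * qty
    # Apply the discount and format the result
    total = subtotal * (1 - discount)
    return f"{len(items)} items, total {total:.2f}"


# After: the same behaviour, split into small functions with clear names.
def validate_items(items):
    """Reject line items with a negative price or quantity."""
    for name, price, qty in items:
        if price < 0 or qty < 0:
            raise ValueError(f"invalid line item: {name}")


def subtotal_of(items):
    """Sum price * quantity over all line items."""
    return sum(price * qty for _, price, qty in items)


def format_summary(count, total):
    """Render the one-line summary shown to the user."""
    return f"{count} items, total {total:.2f}"


def order_summary(items, discount):
    """Reads like a table of contents: validate, total, format."""
    validate_items(items)
    total = subtotal_of(items) * (1 - discount)
    return format_summary(len(items), total)


if __name__ == "__main__":
    cart = [("pen", 1.50, 4), ("notebook", 3.00, 2)]
    # Both versions print the same line: the refactoring preserved behaviour.
    print(order_summary_long(cart, 0.10))
    print(order_summary(cart, 0.10))
```

Each extracted function can now be read, changed and checked on its own, which is the same benefit the article is after for test cases.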

The same can and should be applied to writing test cases. Aim to write smaller test cases with a clear purpose and a clear name.

A well-planned test run can be read like a table of contents of what is to be covered. Each test case should correspond to a specific expectation, something we want the system to do for the user.

Taking a fictional user interface component as an example, a test run for that component should not have a single test case named after the component. Instead, it should ideally have a number of test cases titled:

- Component shows correct xyzzy data;

- Component remembers user choices;

- Component renders correctly;

- Links in component go to correct xyzzy page.

Adding on Variations

As variations get added, code as well as test cases become more repetitive. For example:

You start out with Test x, y and z.

Then a new setting is added, so now your test case changes to:

Toggle the new setting on. Test x, y and z.

Then another variation is added, and you repeat the same instructions over and over again. Or perhaps you need to test the same rule for a number of different components in the system, etc.

In any case, you end up with repetition.

In the coding realm, the solution is to extract the repeated code into a separate method again. In testing, the solution can often be the same: move the repeated steps into a separate test case.
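One way to picture this is to model a test run as data. The sketch below assumes test cases are plain dictionaries with a title and a list of steps. `SHARED_STEPS`, `make_test_case`, `make_test_run` and the variation names are hypothetical. The repeated steps "Test x, y and z" are written once, and each variation becomes its own small test case instead of another copy of the same instructions inside one big test case.

```python
# Hypothetical shared steps: written once, reused by every variation.
SHARED_STEPS = ["Test x", "Test y", "Test z"]


def make_test_case(variation):
    """Build one small test case: the variation-specific setup, then the shared steps."""
    return {
        "title": f"Feature works with {variation}",
        "steps": [f"Set up: {variation}", *SHARED_STEPS],
    }


def make_test_run(variations):
    """One test case per variation, so each can be re-tested on its own."""
    return [make_test_case(v) for v in variations]


if __name__ == "__main__":
    for case in make_test_run(["new setting off", "new setting on"]):
        print(case["title"])
        for step in case["steps"]:
            print("  -", step)
```

With this structure, adding a further variation means adding one entry to the list rather than copying the steps again, and a failure points directly at the variation that caused it.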

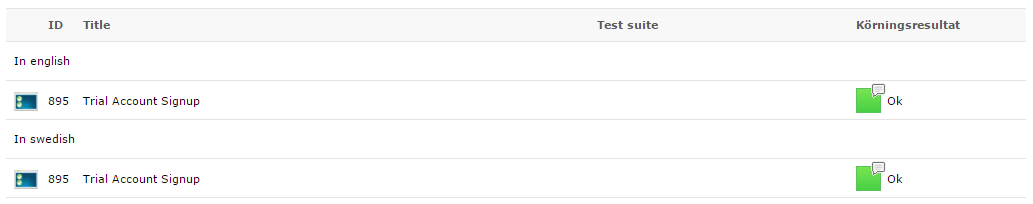

In our team we often solve this using instructions in test runs. Here’s an example of a test case that needs to be tested in two languages:

As you can see in the screenshot, instead of rolling up the two test cases into one, we’ve extracted the instructions for the Swedish language trial account signups and placed them in a separate test case from the English language version.

This avoids unnecessary repetition within a single test case. It also minimises the risk of mistakes when a tester performs the same instructions over and over again and, growing used to them, starts to lower their guard.
